Summary: Who wouldn’t want to improve the transportation status quo? But we’re looking at self-driving car safety in the wrong way. Self-driving cars will also lead to an increase in systemic risk, offsetting some of the gains in safety. Over the next decade or so, there will be more serious discussions about how to implement autonomous vehicles. Based on the way AV companies have framed early public discussions, I worry that people will look at risk in unhelpful ways.

A recent paper titled “Self-Driving Vehicles Against Human Drivers: Equal Safety Is Far From Enough” measures public perception in Korea and China. Since I’ve written about self-driving cars, or autonomous vehicles (AVs), a few times, I wanted to comment on it and on ways to look at risk in a new system.

The paper outlines studies estimating how much safer AVs need to be for the public to accept them. The authors estimate that AVs need to be perceived as 4 to 5 times safer to match the trust and comfort people have with human-driven vehicles.

I’m going to go through a few parts of the paper and tell you why I think the findings aren’t relevant to the AV discussion (though they are interesting).

What Metrics Matter?

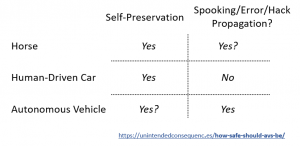

Optimization vs systemic risk. AVs present many ways to fall into this systems trap, something I’ve written about before in Autonomous Vehicles and Scaling Risk. Think of the dangerous sides of human driving. Humans drive tired, drive under the influence, and see poorly in the dark. They speed to cut travel time and waste hours of their lives in cars. Why would anyone want to maintain the status quo?

If we merely look for ways to optimize the current state, there are many. Just start with the shortlist above. AVs free of those bad human behaviors should cause fewer road accidents and, in turn, fewer lost lives.

But the optimization approach ignores the overall systemic risk added by AVs. Let’s look at some potential outcomes even when AVs are 4 to 5 times safer than human drivers.

These examples differ from other safety policy changes that act on individuals, such as creating speed limits, mandating seat belts, improving road surfaces, and installing better lighting.

AVs can be safer and yet the whole system can be riskier.

Average is not a good measure. Likewise, AVs can be safer on average and still shift danger elsewhere in the system. There are many ways to arrive at the same average safety. Consider a simple example: 100 accidents a day for 30 days vs no accidents for 29 days and then 3,000 accidents on day 30. Same average, different reality.
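The arithmetic is easy to check. Here is a minimal Python sketch using the illustrative numbers from the example (not real accident data). The `summarize` helper is hypothetical, and the worst day and standard deviation are simply two common ways to look past the mean.

```python
from statistics import mean, pstdev


def summarize(daily_accidents):
    """Return the mean, worst single day, and population std dev of daily accident counts."""
    return {
        "mean": mean(daily_accidents),
        "worst_day": max(daily_accidents),
        "std_dev": pstdev(daily_accidents),
    }


# Illustrative 30-day scenarios taken from the example in the text (not real data).
steady = [100] * 30        # 100 accidents on every one of 30 days
spiky = [0] * 29 + [3000]  # no accidents for 29 days, then 3,000 on day 30

if __name__ == "__main__":
    for name, days in (("steady", steady), ("spiky", spiky)):
        s = summarize(days)
        print(f"{name}: mean={s['mean']}, worst day={s['worst_day']}, std dev={s['std_dev']:.1f}")
```

Both scenarios report a mean of 100 accidents a day. Only the worst-day and spread figures reveal that one month is calm and the other ends in catastrophe.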

We should be more interested in extremes. I don’t think we are considering the potential peaks on the graphs above.

Systems for spreading. Comparing the measured safety of AVs to that of human-driven cars is like comparing COVID to cardiac arrests: the comparison ignores the potential for a problem to spread. With human-driven cars (and heart attacks), bad driving (or bad health choices and genetics) generally does not spread to others. But with AVs (or COVID), bugs or hacks (or sneezes) do spread the problem around. What looks safer can change quickly.

In recent months, major organizations have been hacked (Garmin, Twitter, SolarWinds, etc.). Those reminders should temper our trust in networked transportation AI.

Single car vs fleet. There is a difference between someone riding in a single self-driving car and fleets of self-driving cars making up the majority of road traffic. Just as someone may feel safer (or be safer) carrying a gun, if everyone carries one then the system changes and everyone may become less safe.

Changing human driver behavior. As noted by researchers at the University of Cambridge running an AV simulation, “when a human-controlled driver was put on the ‘road’ with the autonomous cars and moved around the track in an aggressive manner, the other cars were able to give way to avoid the aggressive driver, improving safety.” If human drivers can game the system and manipulate AV behavior, will they create more accidents as a result?

This is preliminary research, but claims that AVs can improve traffic by 35% also don’t consider how transportation demand and driver behavior change as a result. We’ve seen this in legacy infrastructure that prioritizes driving over pedestrians. As more space is given to cars, traffic at first improves, followed by more demand for transportation, ultimately leading to worse traffic.

Changing pedestrian behavior. Similarly, pedestrians may change their behavior, at least in some locations. I call this the New York City limo effect. When I lived in NYC, it was normal to see a pedestrian slap the back of a limo in traffic. AVs tend to be noticeable too. Will pedestrians start to taunt the cars? Try to get hit so they can sue the well-funded companies? Ignore traffic because the self-driving cars will always stop?

What are the intermediate steps? A mix of AVs and human-driven cars might be more dangerous than only human-driven cars or only AVs. What happens in the interim? One option is to keep AVs in separate lanes or on separate roads. Do we ever reach the point at which no human drivers are allowed? I expect that just as there are special interests for AVs, there will also be special interests for human-driven vehicles. After all, we still have slow-moving horse-and-buggy drivers, and people need to adjust their driving around them.

Asking vs observing preferences. How people answer survey questions about their future behavior differs from how they actually behave. In the survey discussed earlier, some participants had no knowledge of AVs before responding. Should we ask people what they would do in a future state? People tend to be bad at that type of prediction. A further problem is that few people have the option to ride in AVs today.

Impact of medical care on car accident fatality rate. As we saw in an earlier post (Is the World Getting Safer?), just looking at fatality rates can tell a misleading story. Better medical care reduces fatalities while there may be just as many life-threatening accidents. If AVs improved our ability to deliver medical care by alerting an ambulance after an accident, we might have better outcomes even with more accidents. But which metric should matter more: patient outcomes or the number of patients created?

Banal harm. This concerns the improvements AVs are assumed to bring to other aspects of driving. Do these hold up?

- Traffic: assumed to be better. Or does demand for transportation increase, leading traffic to get worse? This is what we already see when we add more roads.

- Pollution: assumed to improve due to more efficient driving. Or does it get worse because there is more driving?

- Pricing: assumed to be lower from eliminating human drivers and driving more efficiently. Or do technology, insurance, and legal requirements, along with higher demand, drive prices up?

Ethics by Survey

How can we avoid making irreversible decisions where people are shocked at bad outcomes? There are a few steps.

Consider who makes the decision. If we use public opinion surveys to make the decision, we risk the following:

- People from the past determine future outcomes. But they don’t know what the future will be like.

- People from one location determine outcomes elsewhere. Their local situations and appreciation of risk may be different.

- People used to a human-driver system make decisions about a different and theoretical self-driving system. They aren’t able to compare the two before experiencing them both.

Does the popular story of support come from the industry that stands to benefit? A recent example is the $200 million spent to support a Yes vote on California’s Proposition 22 (on whether rideshare drivers are employees or contractors). The companies benefiting from Prop 22 controlled the narrative (“it’s about personal choice”). Is the same happening with AVs (“it’s about safety”)?

Consider scaling risk. As I wrote in Autonomous Vehicles and Scaling Risk:

“As a thought experiment, instead of decreasing, what would it take to increase traffic deaths per capita? With traditional cars, forcing automotive deaths to increase would be nearly impossible. It would take a strange coordinated, long-term purposeful political and corporate attack to eliminate safety regulations…. But it would not be possible at scale to force or trick individual drivers into killing each other.”

Ethics by Survey. There’s a problem when we try to assign values to the different choices AVs must make. “The Moral Machine Experiment” attempts to look at what it means when AVs make life-or-death decisions.

Play the Moral Machine and you’ll be put into many unusual scenarios, such as: should the AV continue straight and kill a man and a homeless person, or swerve and kill a male executive and a woman?

Results vary around the world. The exercise might not be a total waste of time, but it is mostly one.

Instead, what could we do to mitigate the risks above?

Consider:

- Could we limit risk and much of the ethical discussion by designating AV-only lanes, separated from human traffic? Or limiting AVs to non-human cargo?

- Apart from Uber and Lyft, many other companies have AV initiatives, including Amazon, Apple, BMW, Baidu, Cisco, Didi Chuxing, Ford, GM, Honda, Tesla, and more. With that many companies investing, the industry is going to influence the dialog.

- Industry proponents market AVs as a virtue, with support from groups including MADD (Mothers Against Drunk Driving) and the National Federation of the Blind.

- Industry groups state that their goal is the safe implementation of driverless technology. Rather than supporting driverless tech, what if we instead supported the supposed outcomes of AVs, like lower prices, lower pollution, and lower congestion?

- How do we avoid building a one-way road system? Is it possible to reverse course from an AV-majority or AV-exclusive road system?

Thank you to Caleb Ontiveros, Dan Stern, Étienne Fortier-Dubois, and Tom White for reading an earlier draft of this article.