Peter Drucker said “if you can’t measure it you can’t improve it,” but he didn’t mention the second-order effects of that statement. What changes after people get used to the measurements? What if we measure things that are only partly relevant to what we’re trying to improve?

Tracking metrics can tell us something new, but can also create problems. Let’s look at how Goodhart’s Law leads to unintended consequences.

A New Morality of Attainment

Goodhart’s original quote, about monetary policy in the UK (of all things), was:

“Any observed statistical regularity will tend to collapse once pressure is placed upon it for control purposes.”

But Goodhart’s original is often reinterpreted so that we can talk about more than economics. Anthropologist Marilyn Strathern, in “Improving Ratings: Audit in the British University System,” summarized Goodhart’s Law as:

“When a measure becomes a target, it ceases to be a good measure.”

This is the version of the law that most people use today.

Other commonly used variations include the Lucas Critique (from Robert Lucas’s work on macroeconomic policy):

“Given that the structure of an econometric model consists of optimal decision rules of economic agents, and that optimal decision rules vary systematically with changes in the structure of series relevant to the decision maker, it follows that any change in policy will systematically alter the structure of econometric models.”

And also Campbell’s Law:

“The more any quantitative social indicator is used for social decision-making, the more subject it will be to corruption pressures and the more apt it will be to distort and corrupt the social processes it is intended to monitor.”

I’m going to stick with Strathern’s description because it’s simpler and more people seem to know it.

Strathern wrote about the emergence, in Cambridge in the mid-1700s, of written and oral exams as a way to rate university students.

Those students’ results were supposed to show how well the students had learned their material, but they also came to show how well the professors taught and how good the university was.

But to determine how well students, professors, and universities had performed, the exams couldn’t be graded in traditional, qualitative ways. They needed a way to rank the students.

“This culminated in 1792 in a proposal that all answers be marked numerically, so that… the best candidate will be declared Number One… The idea of an examination as the formal testing of human activity joined with quantification (that is, a numerical summary of attainment) and with writing, which meant that results were permanently available for inspection. With measurement came a new morality of attainment. If human performance could be measured, then targets could be set and aimed for.”

Strathern also described the difficulties of the rankings.

“When a measure becomes a target, it ceases to be a good measure. The more examination performance becomes an expectation, the poorer it becomes as a discriminator of individual performances. [T]argets that seem measurable become enticing tools for improvement…. This was articulated in Britain for the first time around 1800 as ‘the awful idea of accountability’….”

Again from Strathern’s paper:

“Education finds itself drawn into the rather bloated phenomenon I am calling the audit culture… The enhanced auditing of performance returns not to the process of examining students, then, but to other parts of the system altogether. What now are to be subject to ‘examination’ are the institutions themselves—to put it briefly, not the candidates’ performance but the provision that is made for getting the candidates to that point. Institutions are rendered accountable for the quality of their provision.

“This applies with particular directness in Teaching Quality Assessment (TQA), which scrutinizes the effectiveness of teaching—that is, the procedures the institution has in place for teaching and examining, assessed on a department by department basis within the university’s overall provision…. TQA focuses on the means by which students are taught and thus on the outcome of teaching in terms of its organization and practice, rather than the outcome in terms of students’ knowledge. The Research Assessment Exercise (RAE), on the other hand… specifically rates research outcome as a scholarly product. Yet here, too, means are also acknowledged. Good research is supposed to come out of a good ‘research culture’. If that sounds a bit like candidates getting marks for bringing their pencils into the exam, or being penalized for the examination room being stuffy, it is a reminder that, at the end of the day, it is the institution as such that is under scrutiny. Quality of research is conflated with quality of research department (or centre). 1792 all over again!”

Measuring the quality of teaching (or quality of research) rather than what students learn (or research results) is an odd outcome. But it makes sense. Adherence to a process seems related to the goals, so the process becomes what’s measured.

John Gall, a popular systems writer, also outlined something like this in his book Systemantics. Here he describes how a university researcher gets pulled into metrics-driven work.

“[The department head] fires off a memo to the staff… requiring them to submit to him, in triplicate, by Monday next, statements of their Goals and Objectives….

“Trillium [the scientist who has to respond] goes into a depression just thinking about it.

“Furthermore, he cannot afford to state his true goals [he just likes studying plants]. He must at all costs avoid giving the impression of an ineffective putterer or a dilettante…. His goals must be well-defined, crisply stated, and must appear to lead somewhere important. They must imply activity in areas that tend to throw reflected glory on the Department.”

Universities may have started our focus on metrics, but today we see metrics used everywhere. Here are some examples of metrics used to achieve goals and the problems they created.

Some Inappropriate Metrics

Robert McNamara and the Vietnam War. Vietnam War-era Secretary of Defense Robert McNamara was one of the “Whiz Kids” in Statistical Control, a management science operation in the military. McNamara went on to work for Ford Motor Company and became its president, only to resign shortly afterward when Kennedy asked him to become Secretary of Defense.

In his new role, McNamara brought a statistician’s mind to the Vietnam War, with disastrous results. People on his team presented skewed data, put them in models that told a desired story, and couldn’t assess more qualitative issues like willingness of the Viet Cong and the US to fight. The focus on numbers even when they don’t tell the full story is called the McNamara Fallacy.

The documentary The Fog of War presents 11 lessons about what McNamara learned as a senior government official. The numbered list of lessons:

1) Empathize with your enemy; 2) Rationality alone will not save us; 3) There’s something beyond one’s self; 4) Maximize efficiency; 5) Proportionality should be a guideline in war; 6) Get the data; 7) Belief and seeing are both often wrong; 8) Be prepared to reexamine your reasoning; 9) In order to do good, you may have to engage in evil; 10) Never say never; 11) You can’t change human nature.

Many of those 11 lessons deal with the McNamara Fallacy, including at least numbers 1, 2, 4, 6, 7, and 8.

Easy, rather than meaningful, measurements. If we need to measure something as a step toward our goals, we might choose what is easier to measure instead of what might be more helpful. One example: a startup ecosystem tracking startup funding rounds (often publicly shared) rather than startup success (which takes years, and the results are often private). Another: the unemployment rate, which tracks how many people are looking for work and have not found it, but not how many have given up looking or how many have taken poorly paid jobs.

Vanity metrics. In startups we often talk about “vanity metrics” as being ones that look good but aren’t helpful. A vanity metric would be growth in users (rather than accompanying metrics around revenue or retention) or website visits when those visits may come from expensive ad buys.

For example, when Groupon was getting ready to IPO, they quickly hired thousands of sales people in China. The purpose was to increase their valuation at IPO, not to bring in more revenue in a new market. When potential investors saw that Groupon had a large China team, they thought the company would succeed.

Vanity metrics can occur anywhere. When some police departments started to track crime statistics, they also started to underreport certain crimes since the police were judged based on how many crimes occurred.

Surrogate metrics in health care. There’s a trade-off when measuring efficacy of a medicine. How long do we wait to prove results? If there are proxies for knowing whether a patient seems to be on the path to recovery, when do we choose the proxy rather than the actual outcome? As described in Time to Review the Role of Surrogate End Points in Health Policy:

“The Food and Drug Administration (FDA) in the United States and the European Medicines Agency (EMA), have a long tradition of licensing technologies solely on the basis of evidence of their effects on biomarkers or intermediate end points that act as so-called surrogate end points. The role of surrogates is becoming increasingly important in the context of programs initiated by the FDA and the EMA to offer accelerated approval to promising new medicines. The key rationale for the use of a surrogate end point is to predict the benefits of treatment in the absence of data on patient-relevant final outcomes. Evidence from surrogate end points may not only expedite the regulatory approval of new health technologies but also inform coverage and reimbursement decisions.”

Nudging. Nudges are little encouragements used by governments and businesses to change what individuals choose to do. Sometimes the nudge comes in the form of information about what other people do. For example, in the UK, tax compliance increased when people received a letter stating that “9 out of 10 people in your area are up to date with tax payments.”

But what if the goals of the nudges don’t consider other outcomes?

In “The Power of Suggestion: Inertia in 401(k) Participation and Savings Behavior” the authors show how changing default choices in employee retirement decisions resulted in more people choosing to save, but also resulted in more people continuing with default conservative money market investment choices.

The nudge increased the goal of higher employee savings compliance but also created a situation where more employees gave up higher returns they could have had from long-term equity investing.

Artistic and sports performances. Judged artistic competitions are scored in different ways and judging criteria sometimes change. Tim Ferriss realized that tango dance competitions ranked turns highly, so he did lots of turns to win, even though he was a relative novice compared to the other competitors. Something similar happened when Olympic skating changed to value the technical difficulty of each component of the skaters’ performance. As a result, skaters check off technical moves and include fewer of the subjective artistic ones, which can leave performances less beautiful to watch.

Alpha Chimp. Metrics are a modern invention, but there are versions of them in other societies. In Jane Goodall’s book In the Shadow of Man, we learn of a low-ranking chimpanzee “Mike” who suddenly became alpha male. The top-ranked male was often the toughest chimpanzee in the group, a position backed up by size, intimidation, and what the rest of the group accepted. Mike was at the bottom of the adult male hierarchy (attacked by almost all the other males, last access to bananas). But Mike realized that he could use some empty oil cans that Goodall had left at her campsite in a new way. He ran through the group of chimpanzees banging the cans together. The other chimpanzees had never heard noise like that before and scattered. Maybe Mike proved that the top-ranked position, which should be based on who best leads the group, was actually based on who was scariest.

Four and More Types of Goodhart

One of the best papers digging into variations of the metric and goal problem is Categorizing Variants of Goodhart’s Law, by David Manheim and Scott Garrabrant. Their paper outlines four types of Goodhart’s Law and why they happen.

Regressional. When selecting a metric also selects a lot of noise. Example: choosing to do whatever the winners of “person of the year” or “best company” awards did. You might not see that the person was chosen to send a political message or that the company was manipulating numbers and will fall next year.
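
The regressional case can be illustrated with a small simulation. This is a minimal sketch under assumptions that are mine, not the paper’s: each candidate’s proxy score is their true quality plus independent noise of the same size, both drawn from a standard normal distribution, and the function name, candidate count, and seed are all hypothetical.

```python
import random
import statistics


def simulate_selection(n_candidates=10_000, top_k=100, seed=0):
    """Rank candidates by a noisy proxy and compare it with their true quality."""
    rng = random.Random(seed)
    candidates = []
    for _ in range(n_candidates):
        goal = rng.gauss(0, 1)   # true quality: what we actually care about
        noise = rng.gauss(0, 1)  # luck, politics, measurement error (assumed)
        candidates.append((goal + noise, goal))  # (proxy, goal)

    # Pick the "award winners": the top_k candidates by proxy score.
    winners = sorted(candidates, reverse=True)[:top_k]
    mean_proxy = statistics.mean(proxy for proxy, _ in winners)
    mean_goal = statistics.mean(goal for _, goal in winners)
    return mean_proxy, mean_goal


mean_proxy, mean_goal = simulate_selection()
print(f"winners' average proxy score: {mean_proxy:.2f}")
print(f"winners' average true quality: {mean_goal:.2f}")
```

Under these assumptions the winners are still better than average, but their true quality falls well short of what their proxy scores suggest, because choosing the top scorers also chooses the people who were luckiest.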

Extremal. This comes from out-of-sample projections. When our initial information is within a specific boundary we may still want to project what could happen out of the boundary. In those extreme cases, the relationship between the metric and the goal may break down.
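
A toy calculation shows how an extremal breakdown might look. It is only a sketch: the goal function below is a hypothetical curve that I chose so that the goal rises with the metric at first and then falls, and the observed range and the extreme value are equally made up.

```python
def true_goal(metric):
    """Hypothetical goal: improves with the metric up to 3, then declines."""
    return metric * (6 - metric)


def fit_line(xs, ys):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x


# We only ever observed the metric between 0 and 2.
observed_x = [i * 0.25 for i in range(9)]
observed_y = [true_goal(x) for x in observed_x]
slope, intercept = fit_line(observed_x, observed_y)

extreme = 8  # far outside the observed range
predicted = slope * extreme + intercept
actual = true_goal(extreme)
print(f"fitted slope inside the observed range: {slope:.2f}")
print(f"predicted goal at metric={extreme}: {predicted:.2f}")
print(f"actual goal at metric={extreme}: {actual:.2f}")
```

Inside the observed range, more of the metric really does mean more of the goal, so the fitted line looks trustworthy. Pushed to the extreme value, the line predicts a large gain while the hypothetical goal has turned negative.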

Causal. Where the regulator (the intermediary between the proxy metric and the goal) causes the problem. For example, when pain became a 5th vital sign, doctors were measured by their ability to make their patients more comfortable. If doctors start to prescribe pain medication too often or too easily, they may increase addiction.

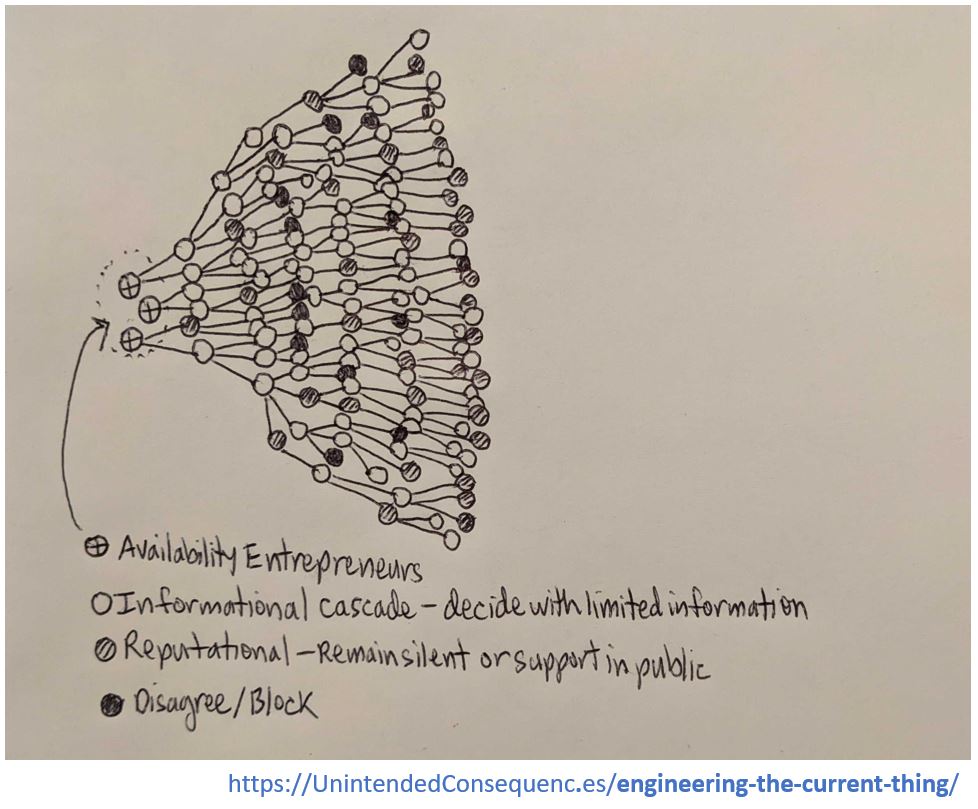

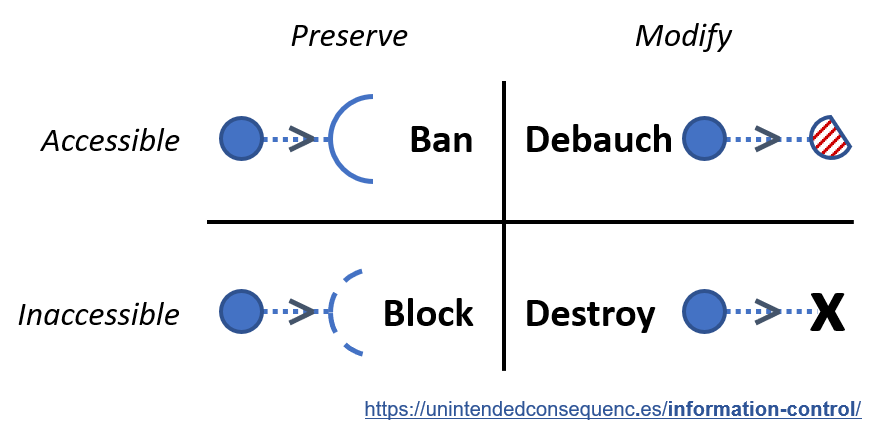

Adversarial. Where agents have different goals from the regulator and find a loophole that harms the goal. For example, colonial powers wanting to decrease the number of cobras in India or rats in Vietnam and paying a bounty for dead cobras or rat tails. People discovered that they could raise their own cobras to kill or cut off rat tails and release the rats. This is known as the Cobra Effect.

Beyond Manheim and Garrabrant’s four types, others find that the law itself takes different forms.

Right vs Wrong. Noah Smith splits Goodhart’s Law into wrong and right versions:

“The ‘law’ actually comes in several forms, one of which seems clearly wrong, one of which seems clearly right. Here’s the wrong one:

“Any observed statistical regularity will tend to collapse once pressure is placed upon it for control purposes.

“That’s obviously false. An easy counterexample is the negative correlation between hand-washing and communicable disease. Before the government made laws to encourage hand-washing by food preparation workers (and to teach hand-washing in schools), there was a clear negative correlation between frequency of hand-washing in a population and the incidence of communicable disease in that population. Now the government has placed pressure on that regularity for control purposes, and the correlation still holds…”

And here’s Smith’s version of Goodhart’s Law that seems true to him:

“As soon as the government attempts to regulate any particular set of financial assets, these become unreliable as indicators of economic trends.

“This seems obviously true if you define ‘economic trends’ to mean economic factors other than the government’s actions. In fact, you don’t even need any kind of forward-looking expectations for this to be true; all you need is for the policy to be effective.”

My Summary. I summarize Goodhart’s Law as coming from two places. One is the behavior change that occurs when people start trying to achieve a metric rather than a goal. The other is the problems that come from metrics being imperfect proxies for goals.

Post Goodhart

There are many cases of metrics being poor proxies for a goal. There are also many cases of people changing their behavior to meet metric targets rather than goals.

More awareness of Goodhart’s Law should hopefully lead to fewer cases of it, though maybe I’m too optimistic.

We also have many examples of the way we work, live, and play that are without measurement. We often measure haphazardly but survive or measure nothing and also survive.

Or are we actually making some subconscious measurements that we don’t recognize? If so, could those subconscious measurements be beyond Goodhart style effects?

Tracking metrics can fool us into seeing relationships that don’t exist.

Here are some ways we can try to avoid Goodhart’s Law:

- Be careful with situations where our morals skew the metrics we track. In Morals of the Moment I wrote about how bad metric choices led to bad outcomes in forest fires, college enrollment, and biased hiring.

- Check for signs of vanity metrics (metrics that can only improve or which have weak ties to outcomes).

- Check whether we’re too process-focused (audit culture) rather than outcomes-focused.

- Regularly update metrics (and goals) as we see behavior change or find better ways to track progress toward a goal.

- Keep some metrics secret to avoid “self-defeating prophecies.”

- Use “counter metrics,” a concept from Julie Zhuo. “For each success metric, come up with a good counter metric that would convince you that you’re not simply plugging one hole with another. (For example, a common counter metric for measuring an increase in production is also measuring the quality of each thing produced.)”

- Allow qualitative judgements that fall beyond numbers. This creates unintended consequences of its own, but can be a good check on our efforts. This is the difference between what Daniel Kahneman calls system one (quick intuition) and system two (slow, analytical, rational thinking). Is there room to act when a situation doesn’t feel right?

- Be more mindful where our choice of metric can impact many people (metrics that change outcomes for large groups should be studied carefully).
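
The counter-metric idea from the list above can be sketched in a few lines. This is one possible way to pair the two numbers, not a method from the quoted source: the class name, the `assess` function, the 2% tolerance, and the production figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MetricChange:
    before: float
    after: float

    @property
    def relative_change(self) -> float:
        return (self.after - self.before) / self.before


def assess(success: MetricChange, counter: MetricChange, tolerance: float = 0.02) -> str:
    """Classify a change using a success metric and its counter metric.

    tolerance is a hypothetical allowance for how far the counter metric
    may fall before we distrust the gain in the success metric.
    """
    if success.relative_change <= 0:
        return "no improvement"
    if counter.relative_change < -tolerance:
        return "suspect: gain may come at the counter metric's expense"
    return "improvement holds"


# Hypothetical numbers: units produced per week and share passing inspection.
units = MetricChange(before=1000, after=1300)
pass_rate = MetricChange(before=0.97, after=0.88)
verdict = assess(units, pass_rate)
print(verdict)
```

Here production rose by 30% while the pass rate dropped by about 9%, so the gain is flagged as suspect instead of being celebrated on its own.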

That we even have Goodhart’s Law is a symptom of a more complex and connected society. In a more localized world there wouldn’t be as much of a problem with tracking metrics to achieve goals, either because our impact would be local or because we just wouldn’t track things at all.

We also might not have an incentive to learn from Goodhart’s Law. Why try to change the way we set metrics if we are not the ones penalized? Why care about skewed outcomes if our timescale of measurement is short and Goodhart outcomes take a longer time?