A recent paper titled Bad Machines Corrupt Good Morals caught my attention. In the paper, the authors demonstrate that AI agents can act as influencers and enablers of bad human behavior. This is something we’ve known for a while, but I appreciate the authors’ organization of the methods.

Specifically, the authors called out four types of decisions that an AI might participate in along with a human. (I think that there is one more grouping as well.) Here’s what the authors focused on:

- AI as an influencer in an advisory role. “Customers buy harmful products on the basis of recommender systems.”

- AI as an influencer in a role model role. “Online traders imitate manipulative market strategies of trading systems.”

- AI as an enabler in a partner role. “Students teaming up with NLG algorithms to create fake essays.”

- AI as an enabler in a delegate role. “Outsourcing online pricing leading to algorithmic trading.”

The inclusion of AI doesn’t change the framework of human behavior as much as it introduces new places in which humans may be influenced and enabled. This could have an impact on individual morals.

When it comes to morals, the easiest way to keep your own standard is to remove yourself from situations in which you are likely to be tempted. Likewise, you should not introduce others to situations in which they are likely to be tempted.

So the paper kicked off some other thinking for me. I wanted to reflect on ways we can think of more general influences on human behavior and where things might go from here.

Beyond bad behavior, what about good behavior that may be produced? Are we too critical of behavior influenced by an AI and not critical enough of behavior influenced by other people? What are the scale effects of such findings?

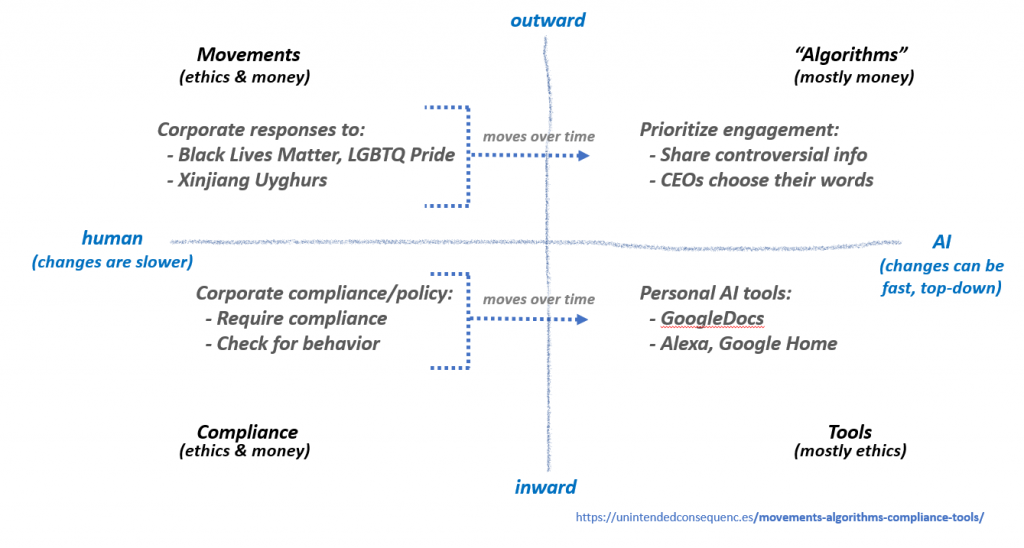

To look more broadly, let’s assess the way movements, algorithms, compliance, and tools impact behavior. Where I focus on humans, I’m focusing on the way companies engage in these quadrants of behavior.

Movements

Movements are grassroots actions that may be leaderless. A movement’s purpose resonates with enough people (or pressures enough people) that it starts to affect personal choices.

When enough people make decisions based on belonging to a movement, they can affect the way company decision-makers make their own decisions. These decisions may be made for reasons of cost (people are boycotting the company because it does not support the movement) or for reasons of individual ethics (decision-makers feel strongly about the movement).

Some company decisions are superficial. Blacking out a logo in support of Black Lives Matter or using a rainbow of colors on a logo for LGBTQ Pride does check the box on supporting these movements, but logos are superficial. There is no real cost. Harder-to-change issues can be ignored. Being superficial can be a benefit for the companies that make changes like these. Plus, companies can restrict their logo changes to one geography. Notably, rainbow logos by international companies are not seen in their Middle East locations.

Some company decisions intersect with governmental desires and have true economic costs. For example, the decision of companies like H&M and Nike not to purchase cotton produced in Xinjiang (possibly made with Uyghur slave labor) resulted in being dropped from Chinese ecommerce sites. Because of the economic pressure, other international companies backtracked and reversed their purchasing policies, even to the point of promoting the use of Xinjiang cotton.

Humans control the Movement quadrant. I expect that some decisions like these transition to the Algorithm quadrant over time.

“Algorithms”

An algorithm is a set of rules that determine an output… which kind of describes a lot of the world. All of the four quadrants are algorithms of a sort. But here I’m focused on the popular usage of the word algorithm. For a while now, social media has sorted content on users’ feeds based on engagement rather than on publish time.

For example, algorithms often prioritize user engagement as a sign of interest. User engagement starts by seeing something notable. Engagement can come from controversial information, whether or not it is accurate. This can lead to a preponderance of content that is negative, aggressive, contradictory, pandering, or worse.

Based on user responses, an algorithm seeking engagement shows people more highly engaging content. It’s not that any content platform wants to draw people into having a negative self-image, believing in a flat earth, or following the latest conspiracy theory. Those outcomes are what come from open sharing and algorithms designed to seek engagement.
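To make the difference between sorting by publish time and sorting by engagement concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `Post` fields, the sample posts, and especially the weights in `engagement_score` are hypothetical and do not describe how any real platform ranks content.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    published_at: int  # hypothetical timestamp; larger means newer
    likes: int
    comments: int
    shares: int


def engagement_score(post: Post) -> int:
    # Hypothetical weights: comments and shares count more than likes.
    return post.likes + 2 * post.comments + 3 * post.shares


def chronological_feed(posts: list[Post]) -> list[Post]:
    # Newest first, regardless of how people reacted.
    return sorted(posts, key=lambda p: p.published_at, reverse=True)


def engagement_feed(posts: list[Post]) -> list[Post]:
    # Most reacted-to first, regardless of age or accuracy.
    return sorted(posts, key=engagement_score, reverse=True)


posts = [
    Post("Local bake sale recap", 3, likes=12, comments=1, shares=0),
    Post("Outrageous claim about the moon", 1, likes=40, comments=55, shares=30),
    Post("City council meeting notes", 2, likes=5, comments=2, shares=1),
]

print([p.title for p in chronological_feed(posts)])
print([p.title for p in engagement_feed(posts)])
```

In this toy data the oldest post is also the most controversial one, so it sits at the bottom of the chronological feed and at the top of the engagement feed. Nothing in the scoring function looks at whether the claim is accurate.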

Algorithms also influence the way humans communicate. In CEOs, Students, and Algorithms, I wrote about the way CEOs alter their word choice to fit sentiment analysis used by algorithmic traders. I include this human example in the algorithm section since the human CEOs are selecting words by the way algorithmic traders judge the words.

“The algorithmic approach to reporting language still makes sense. Algorithmic traders just need to be a little more accurate and faster than the competition over many trades. The CEOs just need stock performance a little better than their peers.”

Compliance

Corporate compliance involves conforming to internal policies and procedures and checking for that conformity. (Friends who work in compliance, please forgive these simple paragraphs.)

Some corporate compliance activities come before the problematic behavior, for example through employee training. Other compliance activities are after the fact, for example identifying problematic behavior after it has taken place and then addressing it.

Here too I expect a shift to the right side of the chart so that tools, rather than people, perform more compliance checks. A number of companies are trying to use employee activities to predict the likelihood of other undesirable behavior.

Tools

The biggest difference in AI tools is that they are widespread (a few billion deployed, depending on how you count) and that they can influence user behavior. Corporate choices in how the tools are designed influence that behavior. (Users hardly ever design their own AI tools.)

That means that choices made by people in the companies producing the tools have the opportunity to impact many individual users.

In Amazon’s Unintended Consequences I wrote about one unexpected way home assistants like Alexa can change user behavior. Alexa devices were set to detect frustration and react accordingly. But does doing that train humans to mistreat their tools? Or does it train humans to mistreat the people that their tools sound like? If the AI tools use female voices, does that impact the way women are treated? From Amazon’s Unintended Consequences:

“When people become frustrated with Alexa, or are just frustrated because they are having a bad day, Alexa responds with an apologetic voice.

“This is called ‘frustration detection’ by Amazon’s Alexa technology group. Frustration detection in other forms has been around for a long time, of course. The original frustration detection is people responding differently to another person’s frustration. Sometimes in an apologetic way and sometimes in an aggressive way depending on the relationship and personality of the respondent.”

A newer way tools can impact user behavior is the way Google Docs plans to recommend non-gendered words to its users (“chairperson” rather than “chairman,” for example). I take this as a decision based on ethics rather than economics. Google Docs is a free service and I doubt the cost or benefit will be a big deal for Google, a company whose revenues are over 80% from advertising.

I do wonder what the actual user impact will be. Will it just push people to whichever side they tend to lean toward? That is, people who want to use non-gendered words find the recommendations helpful and those who don’t want to change their language rebel in the other direction? While we might disagree on what good behavior is, we might want tools that could encourage it.

Choosing between pen and keyboard, or paper and screen, does influence the way you write. But those tools didn’t guide word choice in the same way as Google Docs could.

A 5th Group

Going back to the paper, the four ways that AI can impact human behavior reflect what humans do already. I thought another interesting way was one that the authors didn’t address: the way an AI can learn different things by observing the human and adjusting its responses accordingly.

A capability to track the same user across new situations, the speed with which the learning could take place, and the scale to which new learning could be deployed form other surprising impacts that AI could have on human behavior. We’ll see where this takes us.